Having worked both as a programmer and as a senior QA (and Software Safety) engineer, I have a love-hate relationship with TDD and Unit Tests. Love, because they are superb tools that help developers write much better code… And hate, because the sheer volume of ridiculous misconceptions, misunderstandings and overblown assumptions about how TDD/UT can magically replace a proper SDLC and QC/QA, and the damage they cause, is worrisome.

Let’s start with the good parts and explore the seldom-explained purpose of, and neuroscience behind, TDD/UT.

As early as the 1980s, IBM discovered that when developers write code and then hand it off to someone else, that code is often of low quality. But if the same developers first write their own small tests, and only then write their code until it passes those tests, they deliver much better code. The practice became known as “Test-Driven Development”, and the small tests as “Unit Tests”.

Why is that? It’s a simple matter of psychology and brain architecture.

When programmers just write code and then throw it over the wall for someone else to deal with, the human subconscious is a beast: since work is pain, and coding is work, the natural tendency is to delegate the work to someone else whenever possible.

“I will do the bare minimum and then SOMEONE ELSE will tell me what to improve” is a sentence no one ever says out loud, or even admits to, but the little devil with a pitchfork hovering somewhere behind your left ear is whispering it in a soothing voice.

The price, of course, is the time spent on development. In the real world, every code artifact has to pass Code Review, then QA testing, then Integration testing, and then be packaged and deployed. In enterprise SW (banks etc.), it then often has to wait for the customers to install it on their TEST systems and run their own tests, and then wait again, often for weeks or months, until the customer has a maintenance window to push the new, thoroughly tested code onto their PROD systems. Every defect that bounces back from any of these stages restarts part of that cycle.

Writing Unit Tests makes you think of the edge cases

The fundamental error any programmer can make is to claim that their code is correct because it works for them. If your code works with your data, you have proven only a single thing: your code will work as long as everyone, always, provides exactly the same input you just used.

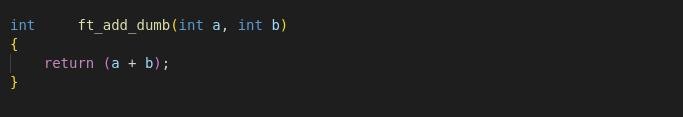

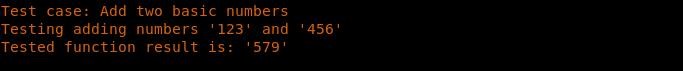

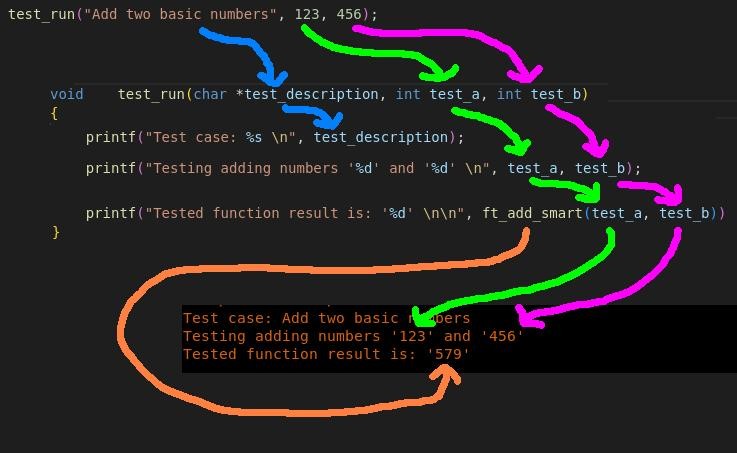

If you write a function to add two numbers, a test of 2 + 2 will probably pass, because that’s the simple, straightforward baseline scenario everyone and their parrot can think of.

But the real problems in SW development are the edge cases.

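- Boundary values, such as INT_MAX + INT_MAX, which for a 32-bit int translates to 2147483647 + 2147483647. Oops! You got an overflow right there, and since signed integer overflow is undefined behavior in C, anything from a garbage result to a crash can follow!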

- Invalid values: you expect to add two integers, but somehow you get a decimal such as 123.54 as input instead.

- Inputs that are correct in substance but incorrect in format, e.g. “six + two” as words in string literals instead of numbers.

There is much more to this, and there are experts whose full-time job is to discover the ugliest possible edge cases and make sure your code survives them. But they don’t have time for the kiddie stuff, and every trivial defect that reaches them costs another round trip through the pipeline described above. That is the developer’s responsibility, and TDD works wonders for it.

The neuroscience behind TDD: Convergent and Divergent thinking in the scope of SW development

What happens if you accept the task of writing Unit Tests up-front, before you start to code? Your brain starts to work in a different mode.

Normally, when you code, you are using convergent thinking (how to implement a single outcome). That puts blinders on your mental eyes, so you simply don’t see anything to the left or right of the single path your subconscious has selected.

But writing tests flips a switch: your brain starts using divergent thinking (“what if?”). Divergent thinking isn’t preoccupied with a single path; it generates as many possible paths as it can. (For more details on convergent vs. divergent thinking applied to SW development, see my old AQA:ATAT course slides, pages 25 through 27.)

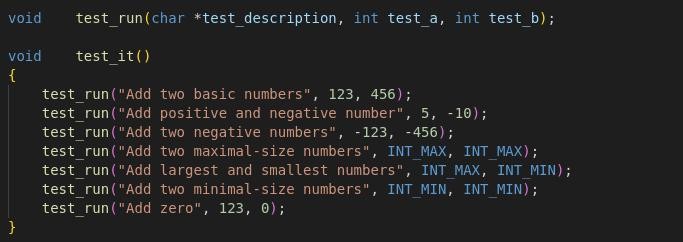

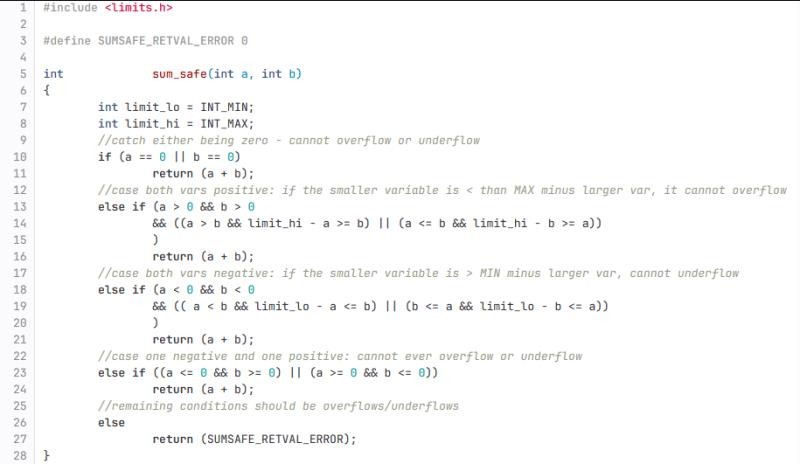

Thus, when you write the Unit Tests up-front, before you start coding, divergent thinking makes you consider the basic edge cases like boundary values, negative values, empty string literals, NULL values, incorrect formats etc., which you would have ignored or forgotten while coding in convergent mode.

Thanks to all this, when you finally start to code, you will already know that your job isn’t as simple as “add two numbers”.

Your job is actually to “add two numbers proven to be integers, of non-zero length, within valid range.” Aha! A very different task, isn’t it?

As a consequence, when you then write the code for the very same function, you can add the appropriate checks you’d otherwise have completely forgotten. Your function can be much safer, more reliable and less likely to cause a defect in the final integrated system. An overblown example from my personal fun sandbox:

The dangerous caveat: Unit tests versus Integrated system tests

In SW development, a “unit” is an abstract name for the smallest code artifact that can work on its own, i.e. do something measurable. Typically, it’s a C function, but it can be an entire C module (a file with many functions), a subprogram, a class, or even a program to be integrated with other programs within some kind of data pipeline.

The important defining property is that such a “unit” is typically useless on its own, without being integrated. E.g. a C function to add two numbers is useless on its own, since there is no way for the user to even access it. Only when it’s integrated into a calculator app as one of its parts does it become useful. And here comes the catch.

I have argued with a lot of high-ranking people who claimed that

“Sum of all Unit tests can replace integration testing – after all, it has 100% code coverage!”

No, no, no, just no! There are two basic, objective, fundamental flaws in this dangerous idea:

- Integration and handling of error states and results

- Edge cases which Code Coverage always misses by definition

Let’s address them both.

System Integration is far more than just linking units of code

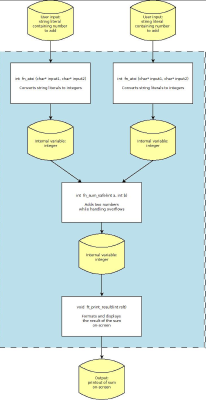

Let’s demonstrate the simplest system integration imaginable: just three heavenly perfect, ideal, absolutely flawless C functions:

- The first function reads user input from the console twice (as character or string literals) and converts it into two integer-type numbers.

- The second function adds these two numbers and returns the sum.

- The third function prints the sum to the screen.

This is the diagram which visualizes the flow and the boundaries between internal functions, integrated system, and external inputs/outputs:

We’ve said that all of the functions within the program are flawless. That means that they magically handle all exceptions and errors… And that’s a challenge for integration.

The 2nd function expects a valid number as its input. What happens when the 1st function, fn_atoi, detects erroneous user input? This is a huge technical challenge (on which I have a massive article in the works). Does the next function understand that error signal? Does it even accept, read and understand error signals on its input?

Was that a requirement? Was it implemented?

Wouldn’t it actually mistake an error/exception signal for valid data, which it then tries to crunch with devastating results, exactly as in the case of Ariane flight V88, which caused over $300M in damages?

And if it has implemented crunching of error signals, is that implementation compatible with the actual signal of the 1st function?

Furthermore, what happens if the 1st function fails completely? Since it’s flawless, it will throw a nice, civilized exception instead of crashing to desktop, ABENDing, BSODing, SEGFAULTing etc. But how will the rest of the integrated program handle such exception?

The same happens everywhere there is an interface between subsystems or units of code through which data flows. That data can either be correct, which is the assumption everyone works with… or it could be erroneous, or an error signal, or an exception thrown, etc.

And once we progress from this simplest case to more complex integrated systems, the complexity of the integration grows almost exponentially. That’s why there is a specific expertise called “System Integrator”, whose services can cost millions of dollars. The whole is more than the sum of its parts, so forget that “sum of unit tests” idea once and for all!

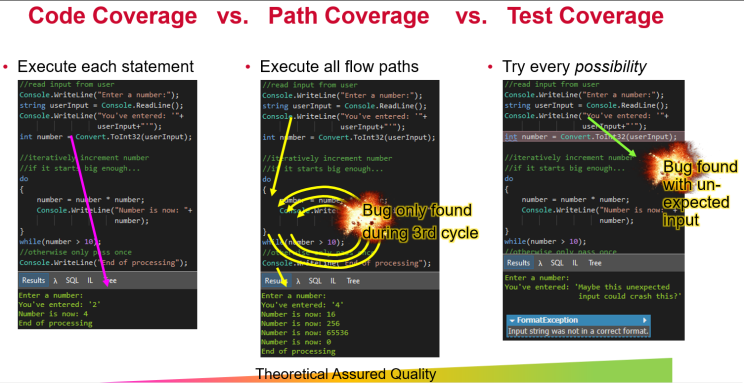

Even 100% Code Coverage would miss over 50% of possible defects

The Code Coverage metric is universally adored in the software industry, but what does it actually say? That each line of code was executed at least once. Raise your hand if you think that is enough. Having second thoughts? That’s wise.

Following is a super-simple but super-powerful practical demonstration of the massive problem lurking beneath Code Coverage, this time in C#.NET code:

When you hear “software defect”, you most likely think of incorrectly written code. But more often than not, the true root cause isn’t bad code: it’s missing code.

Missing code which would’ve been needed to handle some exception, missing code to handle unexpected data, missing code to detect and handle overflows, missing code to integrate complex relationships, missing code to handle unexpected external influence…

And here comes the fundamental problem: no number of Unit Tests in the world can catch that.

Unit Tests are essentially the developer’s glorified personal TODO: list.

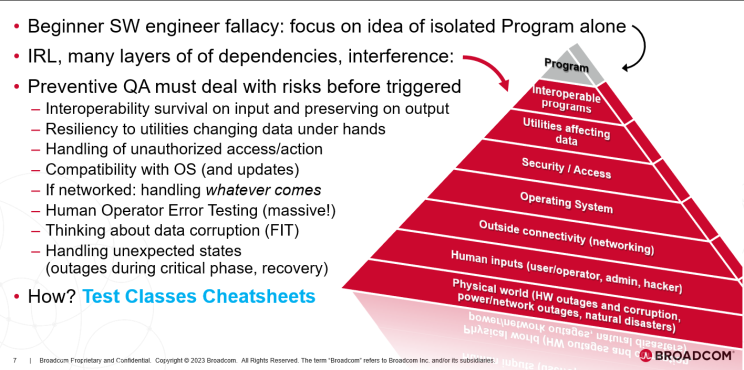

But to catch all these possibilities and make sure the code either handles them, or is found lacking and repaired to handle them… you need massive coverage beyond the Unit Tests’ scope. Because the product you’re developing, even once integrated from its inner units, is just the tip of the iceberg, helplessly exposed to countless unexpected influences.

You need expertise of a completely different field than the developer’s. You need professional QC/QA experts, who are taught, trained and experienced in finding these edge cases, unexpected influences and error conditions.

You need Integration testing, Data flow testing and System testing.

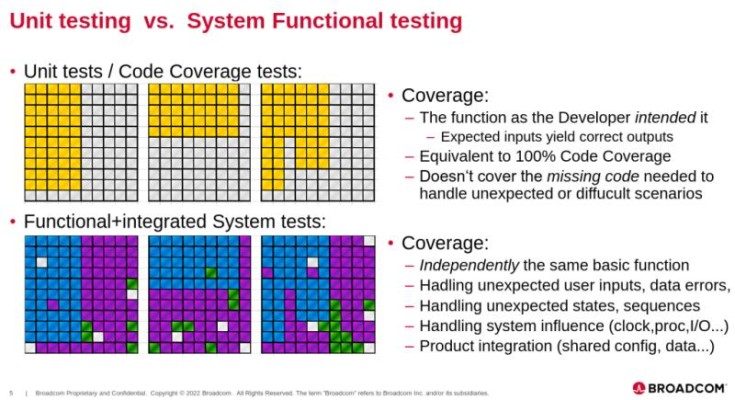

And that’s why although Unit Tests and TDD are great, they can never, ever, replace professional Integrated System Testing. Not even a fraction of it. To further drive this home, the final illustrative visualization:

To conclude: use Unit Tests. Support Test-Driven Development. They have big merits and they work wonders to deliver better code.

But if anyone ever tells you that TDD or Unit Tests replace System testing with professional QAs in any capacity… Now you know for a fact they have absolutely no idea what they’re talking about.
